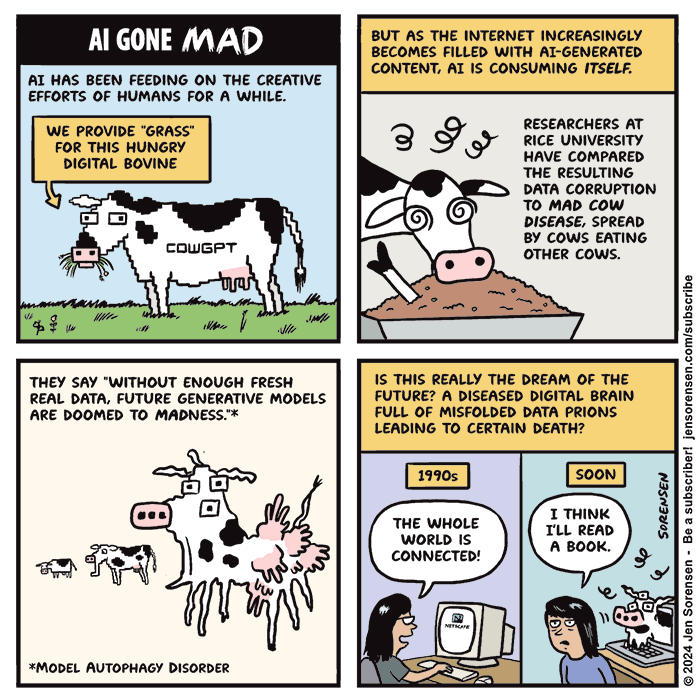

AI Gone MAD

This was inspired by an article about the Rice University study comparing self-consuming AI to mad cow disease, a topic practically begging for a cartoon. To quote one researcher:

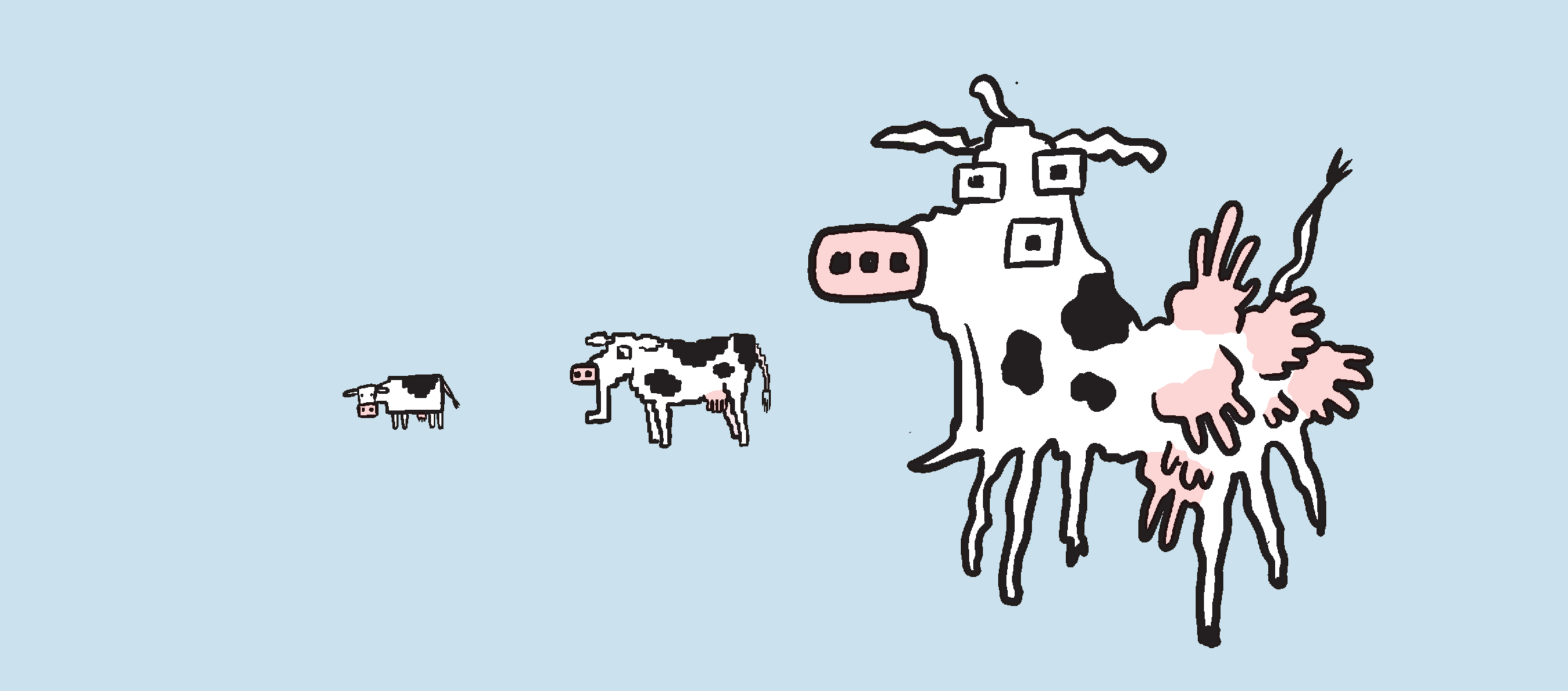

"The problems arise when this synthetic data training is, inevitably, repeated, forming a kind of a feedback loop -- what we call an autophagous or 'self-consuming' loop," said Richard Baraniuk, Rice's C. Sidney Burrus Professor of Electrical and Computer Engineering. "Our group has worked extensively on such feedback loops, and the bad news is that even after a few generations of such training, the new models can become irreparably corrupted. This has been termed 'model collapse' by some -- most recently by colleagues in the field in the context of large language models (LLMs). We, however, find the term 'Model Autophagy Disorder' (MAD) more apt, by analogy to mad cow disease."

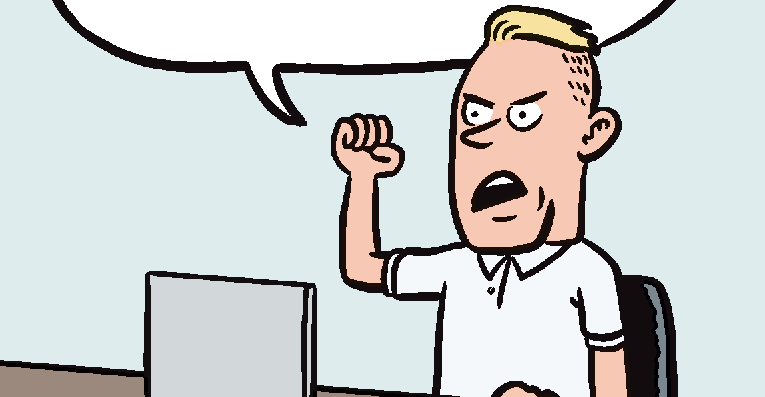

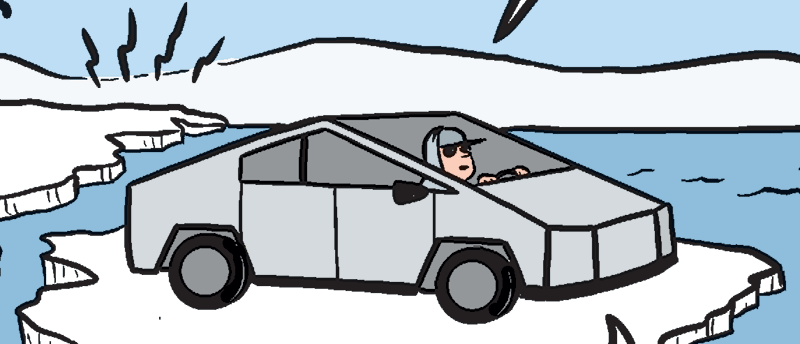

Baraniuk added that "one doomsday scenario is that if left uncontrolled for many generations, MAD could poison the data quality and diversity of the entire internet." This is not quite the technological miracle we are being sold in the TV commercials. (I recently watched parts of the U.S. Open, and practically every ad was for AI, prescription drugs, or gross fast food, which struck me as a sort of dystopian trifecta.)

Personally, I've found it much harder to get useful search results from trustworthy sites whenever I do cartoon research. This all makes me nostalgic for the early, hopeful days of the web. I miss that Netscape Navigator feeling, the quiet thrill of discovering something cool that a real human being created just for the fun of it.

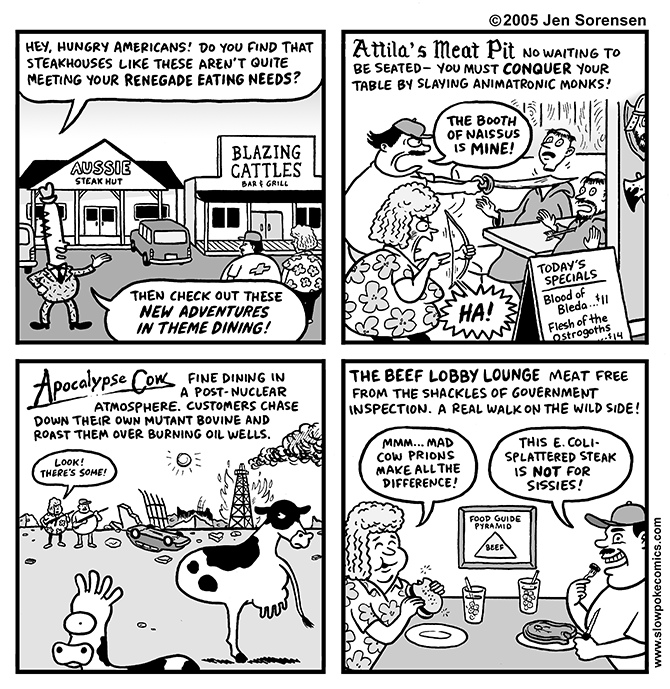

For this week's classic cartoon, I thought I would share this example of Other Devolved Cows I Have Drawn, from 2005. (Mad cow disease was more in the news at that time.)